Remember how your mother could tell if you were tired or feeling down just by hearing your voice over a long-distance phone call? While AI might not match a mother’s intuition, it’s getting closer to detecting a range of diseases by analyzing just a few seconds of speech.

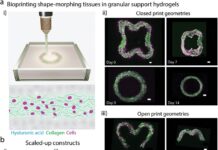

The US National Institutes of Health, in collaboration with the University of South Florida (USF), Cornell, and 10 other institutions, is gathering voice data through its Bridge2AI program to create AI tools that can diagnose diseases using speech patterns. The technology examines every aspect of a voice, from volume and tone to vocal cord vibrations and breathing patterns, to diagnose not only speech disorders but also conditions like neurological diseases, mental health issues, respiratory problems, and spectrum disorders such as autism.

“Voice has the potential to be a biomarker for numerous health conditions. Developing a robust framework that incorporates large datasets with cutting-edge technology will transform how we use voice as a tool for diagnosing diseases,” said Yaël Bensoussan, study lead and director of the USF Health Voice Center, in a press release.

We know that slurred speech is a warning sign of a stroke, but did you know that speaking slowly and in a low tone could indicate Parkinson’s disease? Researchers are even detecting cancer and depression through voice analysis. Maria Espinola, a psychologist and assistant professor at the University of Cincinnati College of Medicine, notes that how people speak has long been a key indicator in diagnosing mental health disorders. “Depressed individuals often speak more monotone, with a flatter, softer voice, reduced pitch range, and lower volume, with more pauses,” she explained. On the other hand, anxiety patients “tend to speak faster and have difficulty breathing.” AI tools are also being used to identify schizophrenia and post-traumatic stress disorder (PTSD) through vocal characteristics.
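
To give a rough sense of what "lower volume, with more pauses" can look like as numbers, here is a minimal Python sketch. It is a toy illustration only, not the method used by Bridge2AI or any of the researchers quoted here. The function names, the 25 ms frame size, the silence threshold, and the synthetic test clip are all assumptions made for the example.

```python
import math

def frame_rms(samples, frame_len):
    """Root-mean-square energy of each non-overlapping frame."""
    frames = [samples[i:i + frame_len]
              for i in range(0, len(samples) - frame_len + 1, frame_len)]
    return [math.sqrt(sum(x * x for x in f) / frame_len) for f in frames]

def voice_summary(samples, sample_rate, frame_ms=25, silence_ratio=0.1):
    """Summarize loudness and pausing (hypothetical helper for illustration).

    A frame counts as a pause when its RMS energy falls below
    silence_ratio times the loudest frame in the clip.
    """
    frame_len = int(sample_rate * frame_ms / 1000)
    rms = frame_rms(samples, frame_len)
    threshold = silence_ratio * max(rms)
    voiced = [r for r in rms if r >= threshold]
    return {
        "mean_volume": sum(voiced) / len(voiced),          # average RMS of voiced frames
        "pause_fraction": 1 - len(voiced) / len(rms),      # share of frames that are pauses
    }

# Synthetic stand-in for a recording: 1 s of a 150 Hz tone, 1 s of silence, 1 s of tone.
sample_rate = 8000
tone = [0.5 * math.sin(2 * math.pi * 150 * n / sample_rate)
        for n in range(sample_rate)]
clip = tone + [0.0] * sample_rate + tone

summary = voice_summary(clip, sample_rate)
print(summary)
```

For this synthetic clip, about a third of the frames are classified as pauses. Real systems work with far richer features and trained models; this only shows that traits a clinician describes in words can be turned into measurable quantities.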

“The current technology can identify features that the human ear can’t pick up. Between doctor visits, a lot can happen, and this technology offers a chance to improve continuous monitoring and assessment,” said Kate Bentley, clinical psychologist and assistant professor at Harvard Medical School.

In fact, a published study of an Indian cohort detected type 2 diabetes with over 80% accuracy based on a 10-second voice clip. The system used phone recordings along with basic health data such as age, BMI, and gender to make the diagnosis.

However, there are concerns about potential misdiagnoses. Speaking in a low tone doesn’t always signify depression or Parkinson’s. Other factors could be at play, and only a trained human professional can distinguish the cause behind certain vocal traits. AI may assist in screening, but it’s still far from providing definitive diagnoses.

As reported by timesofindia.indiatimes.com, there are also issues around voice data privacy and the need for diverse data sets to ensure that AI tools work equally well for patients of different nationalities, genders, and ages. “A very large, diverse, and robust set of data is essential,” said Grace Chang, co-founder of Berkeley-based company Kintsugi. Kintsugi is developing voice-analysis technology for telehealth and call centers, helping identify patients who might benefit from further support. Using voice recordings from various languages worldwide, Kintsugi’s program could, for example, prompt a nurse to ask an overwhelmed parent about their well-being when they call about their colicky infant.

Health conditions AI can detect through voice analysis:

- Type 2 diabetes

- Parkinson’s, Alzheimer’s, stroke

- Depression, schizophrenia, bipolar disorders, PTSD

- Heart failure, COPD, pneumonia

- Autism and speech delays in children

- Laryngeal cancer, vocal fold paralysis